Technical SEO Audits & Fixes: The Complete Professional Guide (with Real Case Study)

Audience: SEO practitioners and technical SEOs who live in Screaming Frog, GSC, and server logs.

Content and links can’t carry a site if search engines can’t efficiently discover, crawl, render, and index its pages. That’s where technical SEO earns its keep. A rigorous technical audit exposes crawl traps, index bloat, rendering failures, and performance bottlenecks—then turns them into a prioritized backlog that engineering can actually ship.

This guide lays out a repeatable, senior-level methodology for end-to-end audits, including crawlability and indexability, architecture, Core Web Vitals, JavaScript SEO, structured data, redirects and error handling, and server log analysis. It closes with a real case study on WebRidez.com (our nationwide automotive enthusiast property) showing the exact issues found and the fixes that moved the needle.

1) What “Technical SEO” Means in 2025

The definition of technical SEO has evolved dramatically. It’s no longer just about optimizing a few server files. In 2025, technical SEO is a sophisticated discipline that sits at the intersection of architecture, performance engineering, and data science. It is the practice of ensuring a website’s underlying infrastructure is perfectly optimized for search engine bots and human users alike. This requires a holistic view of a site, from its server configuration down to the JavaScript running in the browser.

The six core pillars of modern technical SEO are:

- Crawlability: This is the bedrock of SEO. It’s the ability of a search engine bot to access and navigate your website’s pages. A good crawl strategy ensures bots spend their limited crawl budget on your most valuable pages. Issues here often stem from a poorly configured robots.txt file, a cluttered internal linking structure, or a broken sitemap. A technical SEO ensures that every path a bot can take leads to a useful destination.

- Indexability: This refers to a page’s eligibility to be included in a search engine’s index. Just because a bot can crawl a page doesn’t mean it should be indexed. For example, a search results page on your site might be crawlable, but you don’t want it in Google’s index. Indexability is managed through canonical tags, noindex directives, and careful URL parameter handling. The goal is to present a clean, de-duplicated set of pages for Google to rank.

- Renderability: With modern web development frameworks, this pillar has become critical. Many websites now rely on JavaScript to build the page content after the initial HTML is loaded. Renderability is the process of ensuring that all critical content, including text, images, and links, is visible in the final rendered HTML that Googlebot sees. This requires understanding how Google’s rendering service works and adopting strategies like Server-Side Rendering (SSR) or Static Site Generation (SSG).

- Performance: Measured primarily through Core Web Vitals (CWV), performance is a direct ranking signal and a vital component of user experience. Technical SEOs focus on optimizing LCP (Largest Contentful Paint), CLS (Cumulative Layout Shift), and INP (Interaction to Next Paint) to ensure a fast, stable, and responsive site. This involves everything from image optimization to optimizing server-side response times and minimizing main-thread work.

- Security & Consistency: A secure and consistent site signals trust to both users and search engines. This pillar involves enforcing HTTPS sitewide, managing redirects to a single canonical URL (e.g., https://www.example.com), and implementing modern security headers to prevent common web vulnerabilities. A consistent user and bot experience is key to building authority and trust.

- Enhancements: This involves using structured data to provide context to search engines and enable rich results. By implementing valid, non-spammy JSON-LD schema, a technical SEO can help a site earn rich snippets for products, FAQs, articles, and more, which can increase visibility and click-through rates.

The rapid adoption of mobile-first indexing, coupled with the prevalence of JS-heavy front-ends and the increasing importance of page experience signals, has raised the bar for what constitutes a “technical SEO.” Today, the role is as much about understanding architectural decisions and performance engineering as it is about traditional SEO.

2) Why Run Technical SEO Audits

Technical SEO audits are a fundamental practice, not a one-time task. They are a proactive measure to ensure your site’s foundation is solid and a reactive one to diagnose performance issues and visibility drops.

- Find Invisible Barriers: An audit uncovers problems that aren’t visible in a browser. This includes robots.txt directives that block important assets, canonical tags that point to the wrong page, or internal search result pages that have been inadvertently indexed. These invisible barriers can silently cripple organic performance.

- Protect Crawl Budget: For large enterprise sites with millions of pages, crawl budget is a finite and valuable resource. An audit identifies crawl traps (endless faceted filters, parameter-based URLs, or low-value user-generated content) that are wasting bot hits. By cleaning these up, you redirect a bot’s attention to your most important commercial and content pages.

- De-risk Releases: A technical audit is a crucial step before any major site launch, migration, or redesign. It provides a baseline and a checklist to ensure that new infrastructure won’t introduce SEO regressions, broken links, or performance penalties.

- Prove ROI: By tying technical fixes to measurable improvements in Core Web Vitals, index health, and organic traffic, you can demonstrate the direct business value of your work. This is essential for getting buy-in from development and executive teams.

- Cadence: The frequency of audits depends on the site’s size and rate of change. Large e-commerce platforms require continuous, automated monitoring. Most other sites benefit from a deep-dive audit at least quarterly or after any major platform or content changes.

3) Audit Methodology (Field-Tested)

This methodology is a comprehensive, repeatable process for conducting a senior-level technical SEO audit. It’s a systematic approach that combines data from multiple sources to provide a holistic and accurate view of a site’s health.

Tools stack: A professional audit requires a robust toolkit:

- Screaming Frog SEO Spider (or Sitebulb): The primary crawler for site-wide analysis. It identifies broken links, redirect chains, canonical issues, crawl depth, and more. Screaming Frog’s JavaScript rendering feature is essential for modern websites.

- Google Search Console (GSC): The source of truth for how Google sees your site. Use it for the Page indexing report (formerly Coverage), the Core Web Vitals report, and the URL Inspection tool.

- PageSpeed Insights / Lighthouse: For in-depth lab and field data on Core Web Vitals. Lighthouse is a great tool for a page-by-page performance deep dive.

- Server Log Analyzer (Screaming Frog LFA / Splunk): An often-overlooked but crucial tool for understanding what bots are actually doing on your site, not just what they could do.

- Your CMS/Hosting Controls: Direct access to the site’s backend is often necessary to implement or verify fixes.

3.1 Crawlability & Indexability

This is the foundation of any technical audit. If a page isn’t crawlable and indexable, it won’t rank.

- robots.txt: Ensure it permits critical paths and disallows crawl traps (e.g., massive faceted search results, internal search pages, /wp-admin/). Avoid Disallow: /wp-includes/, as it can block the CSS and JavaScript that Googlebot needs to render pages correctly.

- XML Sitemaps: Sitemaps should only contain canonical, indexable URLs. Segmenting sitemaps by content type (e.g., sitemap_products.xml, sitemap_blogs.xml) can help manage large sites and makes indexation problems easier to isolate by section.

- Canonicals: Every canonical page should have a self-referencing canonical tag. Ensure canonical URLs do not point to a page that redirects, as this creates a confusing signal.

- Parameters & Facets: Control parameter sprawl. For faceted navigation, use a standardized URL structure. Consider using noindex or Disallow on thin, unneeded combinations while ensuring the canonical remains the original category page. Do not apply both to the same URL: a page blocked in robots.txt is never fetched, so Google cannot see its noindex.

- noindex/nofollow: Use these directives consistent with your business goals. A noindex tag prevents a page from being indexed. When the links on that page still need to be crawled, pair it with follow (noindex,follow) rather than nofollow.
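
A quick way to sanity-check robots.txt rules before shipping them is to parse a draft and test sample URLs against it. The sketch below uses Python’s standard-library urllib.robotparser; the rules and the example.com URLs are illustrative assumptions, not taken from a real site. Note that this parser uses simple prefix matching, so it will not reproduce Google’s wildcard or longest-match behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text blocks for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    sample = [
        "https://www.example.com/events/",
        "https://www.example.com/search/?q=mustang",
        "https://www.example.com/wp-admin/",
    ]
    for url in blocked_urls(ROBOTS_TXT, sample):
        print("blocked:", url)
```

Feeding it the list of money pages from your crawl is a cheap pre-release check that no critical path is disallowed by accident.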

3.2 Information Architecture & Internal Linking

A site’s internal linking structure dictates how crawl equity flows and signals page importance.

- Crawl Depth: Keep important commercial and content pages within three clicks of the homepage. Use Screaming Frog’s crawl depth report to identify deep URLs that may be neglected by search bots.

- Topical Hubs: Build internal linking strategies around topical clusters. Link from pillar pages to supporting articles and back. This builds authority and a clear topical relevance. Avoid indiscriminate sitewide linking.

- Orphan Pages: Use a combination of your crawler, GSC’s URL Inspection tool, and analytics to find pages with no internal links. These pages are often not discovered by search bots and can be a significant waste of content efforts.
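
Crawl depth and orphan detection can both be reproduced from an internal-link export. The following is a minimal sketch, assuming the links have already been loaded into a dictionary that maps each page to the pages it links to; the function names and sample paths are hypothetical.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage; returns {url: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphans(known_urls, depths):
    """URLs known from sitemaps, GSC, or analytics that no internal path reaches."""
    return sorted(set(known_urls) - set(depths))


if __name__ == "__main__":
    links = {
        "/": ["/events/", "/blog/"],
        "/events/": ["/events/ohio/"],
        "/events/ohio/": ["/events/ohio/cruise-night/"],
    }
    depths = click_depths(links, "/")
    print({url: d for url, d in depths.items() if d >= 3})
    print(orphans(["/", "/blog/", "/old-page/"], depths))
```

Pages that come back at depth three or more, or in the orphan list, are the candidates for new hub links.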

3.3 Speed & Core Web Vitals

Performance is a key ranking signal and a critical component of user experience.

- Targets: Aim for an LCP (Largest Contentful Paint) of 2.5 seconds or less, a CLS (Cumulative Layout Shift) of 0.1 or less, and an INP (Interaction to Next Paint) of 200 ms or less, measured at the 75th percentile of field data.

- Rendering: Ship critical CSS needed for the initial viewport, and defer non-critical CSS and JS to reduce render-blocking resources. Minimize main-thread work to improve interactivity.

- Images: Serve responsive, modern image formats like WebP or AVIF. Always set explicit width and height attributes to prevent layout shifts. Lazy-load images and iframes that are below the fold.

- TTFB: A fast Time to First Byte is crucial. Use HTTP/2 or HTTP/3, leverage a performant hosting environment, implement object caching, and optimize database queries.

- Render-blocking chains: Audit third-party scripts. Many ads, analytics, and marketing tags can slow down your site significantly.
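
When field data is exported in bulk, it helps to bucket each metric the way Google’s tooling does. This is a small sketch using the published good and poor boundaries for LCP, CLS, and INP; the metric keys (lcp_s, cls, inp_ms) and the function name are naming assumptions for this example.

```python
# (good_max, needs_improvement_max) per metric; above the second value is "poor".
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),    # Largest Contentful Paint, seconds
    "cls": (0.1, 0.25),     # Cumulative Layout Shift, unitless
    "inp_ms": (200, 500),   # Interaction to Next Paint, milliseconds
}


def rate_metric(name, value):
    """Classify a 75th-percentile field value as good / needs improvement / poor."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    page = {"lcp_s": 3.1, "cls": 0.04, "inp_ms": 650}
    for metric, value in page.items():
        print(metric, value, rate_metric(metric, value))
```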

3.4 Mobile-First Checks

Since Google has fully switched to mobile-first indexing, every audit must be conducted with a mobile-first mindset.

- Mobile UA: Use a crawler that can render with a mobile user agent to ensure the primary content is in the DOM at render time.

- Layout & UX: Look for mobile layout shifts, fix tap targets that are too close together, and prevent horizontal scrolling caused by oversized elements.

3.5 HTTPS & Security Hygiene

Security is table stakes. A site must be secure and consistently accessible.

- HTTPS Enforcement: Enforce HTTPS sitewide. Redirect all HTTP URLs to their HTTPS counterparts using a permanent 301 redirect.

- Headers: Add HSTS (Strict-Transport-Security) to prevent downgrade attacks. Implement X-Content-Type-Options: nosniff to prevent MIME-sniffing, and use X-Frame-Options or frame-ancestors via a Content Security Policy (CSP) to prevent clickjacking.

- Content Security Policy: Prefer a modern CSP to prevent a range of attacks, including cross-site scripting (XSS).

- External Links: Use rel="noopener noreferrer" on external links that open in a new tab (target="_blank") so the destination page cannot reach back into your page through window.opener and does not share its process.
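
The header checks above are easy to automate once response headers are available as a dictionary (from a crawler export or an HTTP client). The sketch below is one possible check, not a complete security review; the function name and the wording of the combined clickjacking entry are assumptions made for this example.

```python
BASELINE_HEADERS = (
    "strict-transport-security",
    "content-security-policy",
    "x-content-type-options",
)


def missing_security_headers(response_headers):
    """Return the baseline security headers absent from a response."""
    # Header names are case-insensitive, so compare in lowercase.
    headers = {name.lower(): value for name, value in response_headers.items()}
    missing = [name for name in BASELINE_HEADERS if name not in headers]

    # Clickjacking protection can come from either mechanism.
    csp = headers.get("content-security-policy", "").lower()
    if "x-frame-options" not in headers and "frame-ancestors" not in csp:
        missing.append("x-frame-options or CSP frame-ancestors")
    return missing


if __name__ == "__main__":
    observed = {
        "Strict-Transport-Security": "max-age=31536000",
        "X-Content-Type-Options": "nosniff",
    }
    print(missing_security_headers(observed))
```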

3.6 Structured Data

Structured data helps search engines understand the context of your content.

- JSON-LD: Use JSON-LD format exclusively. Ensure the structured data mirrors the on-page content exactly.

- Validation: Use Google’s Rich Results Test or Schema.org’s validator to check for errors and warnings.

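- Appropriate types: Use schemas that are relevant to your content, such as Organization, Article, FAQPage, HowTo, or Product. Valid markup does not guarantee a rich result: Google has restricted FAQ rich results to a narrow set of sites and retired HowTo rich results, so check which features are currently supported before promising a SERP enhancement.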
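
Template-level JSON-LD is usually generated rather than hand-written. Here is a minimal sketch that builds an Organization block with Python’s json module; the function name, the field selection, and the example.com values are placeholders, and a real template would pull them from the CMS.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def organization_jsonld(name, url, logo_url):
    """Return a JSON-LD script tag describing an Organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,
    }
    # Escape "</" so a stray "</script>" inside a value cannot end the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return SCRIPT_OPEN + body + SCRIPT_CLOSE


if __name__ == "__main__":
    print(organization_jsonld(
        "Example Org",
        "https://www.example.com/",
        "https://www.example.com/logo.png",
    ))
```

Generating the block from the same fields that render the visible page is the simplest way to keep markup and on-page content in sync.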

3.7 Duplicate Content & Canonicalization

Duplicate content, even if it’s on your own site, can confuse search engines and dilute link equity.

- Normalization: Normalize protocol (http:// vs. https://), host (www. vs. non-www), and trailing slash conventions to ensure a single, consistent URL for each page.

- Paginated Sets: Google no longer uses rel="prev" and rel="next" as an indexing signal. The best practice is to ensure each paginated page has a clean, self-referencing canonical and that internal links are sound.

- International: Implement hreflang correctly for international sites, including self-referencing hreflang tags. Avoid conflicts where an hreflang annotation points to a URL that canonicalizes to a different page.
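
The normalization rules can be expressed as one function and run over a crawl export to flag URLs that differ from their normalized form. This sketch assumes a site whose canonical form is HTTPS, on the www host, with a trailing slash on non-file paths; those conventions and the www.example.com host are assumptions to adjust per site.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url, canonical_host="www.example.com"):
    """Rewrite a URL to https, the canonical host, and a trailing-slash path."""
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    # Add a trailing slash unless the last segment looks like a file (has a dot).
    if not path.endswith("/") and "." not in last_segment:
        path += "/"
    # The fragment is dropped because it is never sent to the server.
    return urlunsplit(("https", canonical_host, path, parts.query, ""))


if __name__ == "__main__":
    for raw in ("http://example.com/events", "https://www.example.com/sitemap.xml"):
        print(raw, "->", normalize_url(raw))
```

Any internal link, canonical, or sitemap entry where the raw URL differs from normalize_url(raw) is a candidate for cleanup.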

3.8 Index Bloat & Thin Content

Unmanaged content can lead to index bloat, where search engines spend crawl budget on low-value pages.

- GSC Coverage: Audit the GSC Coverage report to find pages marked as “Crawled – currently not indexed” or “Discovered – currently not indexed.”

- Pruning: Prune low-value tag, category, and archive pages from the index and XML sitemaps.

- Consolidation: Consolidate near-duplicate or thin content into a single, robust resource. Fold weak posts into stronger pillars.

3.9 Redirects & Error Handling

Redirects must be managed carefully to preserve link equity and user experience.

- Chains/Loops: Collapse redirect chains (301 -> 301 -> 200) to a single 301 to minimize server requests and preserve link equity.

- Soft 404s: Fix pages that return a 200 status but have no content (soft 404s). These are a signal of poor site health.

- Migration Map: Maintain a living redirect map during site migrations to ensure no URLs are lost. Monitor 404 rates post-release.
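
Collapsing chains is mechanical once the redirects are exported as a source-to-target map. The sketch below follows each source to its final destination and reports loops separately; the function name and the sample paths are hypothetical.

```python
def collapse_redirects(redirects):
    """Map every source directly to its final target; collect sources stuck in loops."""
    collapsed = {}
    loops = set()
    for source in redirects:
        seen = {source}
        target = redirects[source]
        while target in redirects:       # target itself redirects: keep following
            if target in seen:           # revisited a URL: this chain never resolves
                loops.add(source)
                break
            seen.add(target)
            target = redirects[target]
        else:
            collapsed[source] = target   # reached a URL that does not redirect
    return collapsed, loops


if __name__ == "__main__":
    rules = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}
    collapsed, loops = collapse_redirects(rules)
    print(collapsed)
    print(sorted(loops))
```

The collapsed map can replace the existing rules directly, while anything in the loop set needs a manual decision about the correct destination.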

3.10 Server Log Analysis

Server logs provide the single most accurate view of what bots are actually doing on your site.

- Quantify Crawl Allocation: Analyze logs to see which paths and pages Googlebot is crawling most frequently. Is it crawling your money pages or wasting hits on parameter-based URLs?

- Spot Crawl Traps: Use logs to identify patterns of wasted hits on test, staging, or feed endpoints. Prioritize these for disallow or canonical fixes.

- Sitemap Comparison: Compare what’s in your sitemap to what’s being crawled in your logs. If there’s a huge discrepancy, it’s a red flag.
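
Crawl allocation can be estimated with a few lines of parsing before reaching for a dedicated log tool. The sketch below assumes access logs in the common "combined" format and identifies Googlebot by user-agent string only; user agents can be spoofed, so a production pipeline would also verify the requesting IP. The regular expression and function name are assumptions for this example.

```python
import re
from collections import Counter

# Matches the combined log format: ip, identity, user, [time], "request", status, bytes, "referer", "agent".
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count Googlebot requests per (top-level section, has query string)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        raw_path = match.group("path")
        path = raw_path.split("?", 1)[0]
        section = "/" + path.strip("/").split("/", 1)[0]
        hits[(section, "?" in raw_path)] += 1
    return hits


if __name__ == "__main__":
    with open("access.log", encoding="utf-8", errors="replace") as handle:
        for (section, has_params), count in googlebot_hits(handle).most_common(20):
            print(count, section, "(parameterized)" if has_params else "")
```

A high share of parameterized hits in a section is the pattern to investigate first, since it usually points at facets or tracking parameters consuming crawl budget.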

4) Prioritization Framework

An audit is useless without a clear, prioritized action plan. Use a simple Impact x Effort matrix to create a backlog for your development team. This framework is a powerful communication tool.

- Quick Wins (High Impact, Low Effort): These are the low-hanging fruit. Examples include sitemap hygiene, robots.txt corrections, fixing redirect loops, cleaning up mixed content, and adding rel attributes to links. These fixes can be implemented quickly and show immediate value.

- Medium (High Impact, Medium Effort): These fixes require some dev work but offer significant ROI. Think image optimization, implementing critical CSS, and adding template-level structured data.

- Heavy (High Impact, High Effort): These are large-scale projects that require significant planning and resources, such as implementing server-side rendering (SSR) for a JS-heavy site, a large information architecture redesign, or a complex hreflang rollout. These are typically long-term projects that are broken down into smaller, manageable tasks.
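
If the backlog lives in a spreadsheet export, the matrix can be applied as a simple sort. This is an illustrative sketch only: the 1 to 5 scoring scale, the Issue class, and the sample items are assumptions, and real prioritization will weigh dependencies and release timing as well.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    impact: int  # 1 (low) to 5 (high), scored by the SEO team
    effort: int  # 1 (low) to 5 (high), scored with engineering


def prioritize(issues):
    """Order issues by highest impact first, then lowest effort."""
    return sorted(issues, key=lambda issue: (-issue.impact, issue.effort))


if __name__ == "__main__":
    backlog = [
        Issue("Implement SSR for listing pages", impact=5, effort=5),
        Issue("Fix robots.txt blocking CSS", impact=5, effort=1),
        Issue("Add alt text to footer icons", impact=1, effort=1),
    ]
    for issue in prioritize(backlog):
        print(issue.impact, issue.effort, issue.title)
```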

5) JavaScript SEO (When HTML Doesn’t Tell the Story)

The proliferation of JS frameworks has made rendering a core technical SEO concern.

- Rendered HTML: Always check the rendered HTML using tools like Screaming Frog’s “Rendered Page” view or the URL Inspection tool in GSC (Google retired the standalone Mobile-Friendly Test). This shows you what Googlebot sees after JS execution.

- Rendering Solutions: If critical content or internal links are only visible in the rendered DOM, you must adopt a pre-rendering solution like SSR (Server-Side Rendering), SSG (Static Site Generation), or selective pre-rendering. SSR and SSG deliver a complete HTML page from the server, which is immediately crawlable and indexable.

- Performance: Stabilize routes (avoiding hashbangs and dynamic routes), and hydrate efficiently to keep INP low and ensure a fast, interactive experience.

6) Edge SEO & Platform Controls

Edge computing is a new frontier for technical SEO, allowing for server-side fixes without direct changes to the origin server.

- CDN Workers: Use CDN workers (e.g., Cloudflare Workers) to inject security headers, normalize canonical URLs, and manage redirects with zero-downtime. This is particularly useful for enterprise sites with complex needs.

- Bot Shaping: Block obviously bad bot paths at the edge to reduce load on your origin server and shape crawl without starving Googlebot.

7) Reporting & Governance

An audit is worthless if the findings aren’t translated into action.

- Single Source of Truth: Create a centralized document that includes the issue, its business impact, a recommended fix, the owner, and an estimated time of arrival (ETA).

- Agile Sprints: Translate the audit findings into tickets for your dev team’s sprints. Recrawl the site after each batch of fixes to validate the results.

- Automation: Automate weekly crawls and monthly Core Web Vitals checks. Set up alerts in GSC for critical issues.

8) Case Study: Technical SEO Audit of WebRidez.com

Site: WebRidez.com | Platform: WordPress + Divi | Audience: nationwide automotive enthusiasts

Background

WebRidez aggregates and promotes car shows, cruises, swap meets, and community events. Before scaling content and partnerships, we ran a technical audit to clean up crawl and security fundamentals and to improve page experience signals. The goal was to establish a solid technical foundation for future growth.

Audit Inputs

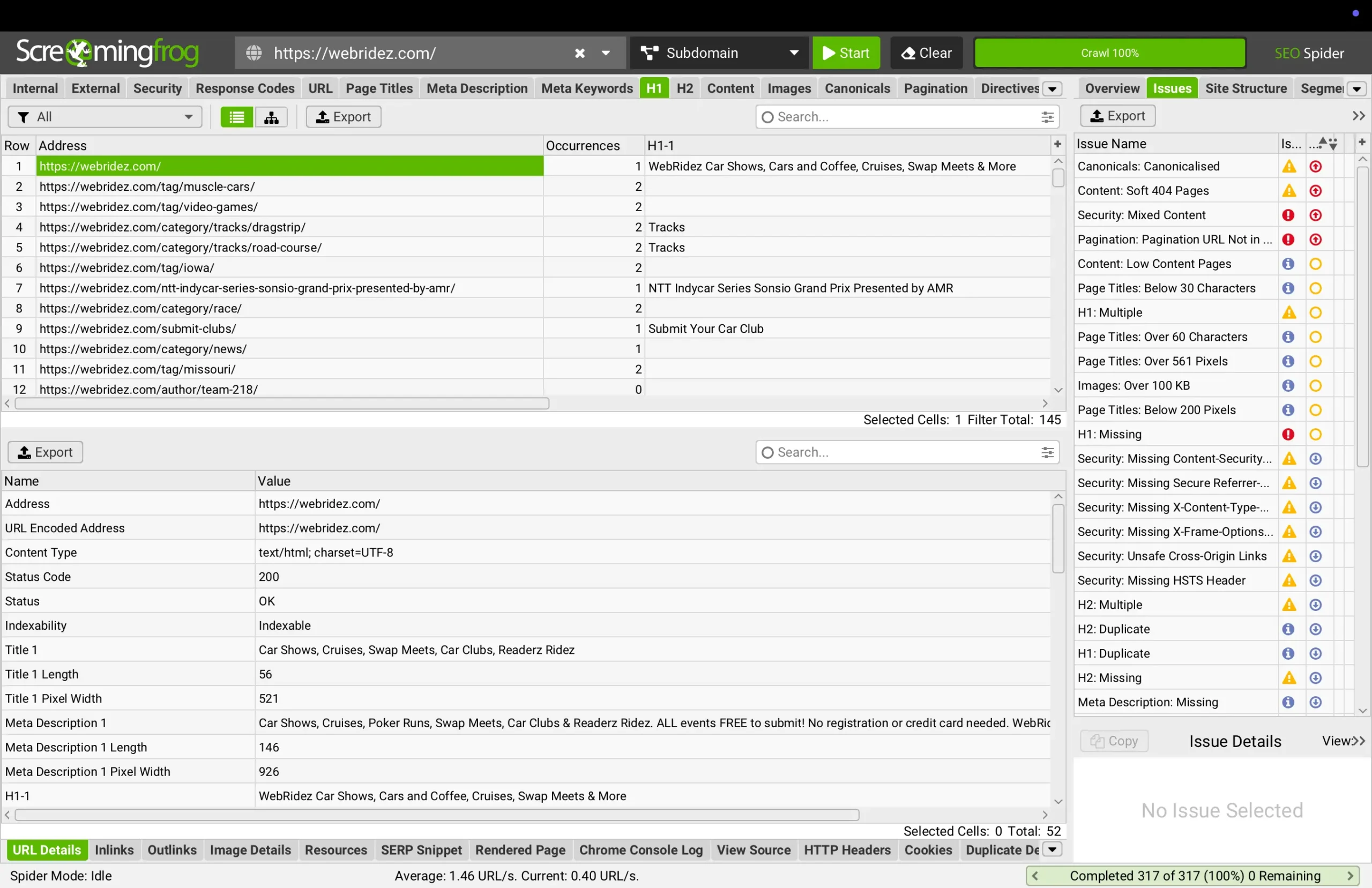

- Screaming Frog full crawl (237 URLs)

- GSC Coverage, Enhancements, and Core Web Vitals reports

- PageSpeed/Lighthouse spot checks

- Manual robots.txt, sitemaps, and schema review

Key Findings (from Screaming Frog)

- HTTPS: The site was entirely HTTPS, which was good, but the crawl flagged one mixed-content asset (an image) that was still being served over HTTP. This triggers a security warning in browsers and is a signal of a poorly configured site.

- Security Headers: A major finding was the absence of critical security headers on virtually every page. HSTS (Strict-Transport-Security), Content-Security-Policy, X-Content-Type-Options, and X-Frame-Options were all missing. This left the site vulnerable to various attacks and signaled a lack of security hygiene.

- External Links: 145 “unsafe cross-origin” links were identified. These external links were missing the rel="noopener noreferrer" attribute, which could expose the site to security vulnerabilities and performance issues.

- Indexation: The main homepage and core categories were correctly indexable, but there was a significant risk of index bloat from thin taxonomy archives (tags, categories for event types) that offered little value to a user and could waste crawl budget.

- Schema: The site had no structured data, a major missed opportunity for rich results.

Fixes Implemented

- Mixed Content: The one mixed-content asset was identified and the URL was updated to a secure https:// version by auditing the theme and plugin enqueues. This simple fix resolved the security warning and ensured a fully secure experience.

- Security Headers: We added HSTS and the baseline hardening headers (X-Content-Type-Options, X-Frame-Options) via the .htaccess file on the origin server.

- Link Attributes: We bulk-applied rel="noopener noreferrer" to all outbound links by adding a function to the theme’s functions.php file to modify the link output automatically.

- Index Bloat: We pruned thin tag and category archives from the XML sitemaps and used the robots.txt file to Disallow crawling on these paths, reducing the risk of index bloat and redirecting crawl budget to more valuable pages.

- Structured Data: We implemented Organization schema sitewide and Article schema on all editorial and event-explainer pages, accurately reflecting the content. The new schemas were validated and tested in Google’s Rich Results Test.

Results (60 days)

- Security: The mixed-content warning was resolved, and the new security headers were verified in the HTTP response.

- Crawl Efficiency: Post-fix recrawls showed far fewer security issues, allowing crawl budget to focus on the content.

- Indexation: Thin archives were successfully removed from the index, and GSC’s Coverage report showed a healthier index.

- Visibility: Within 60 days, GSC impressions increased by 21% and clicks by 14%, led by queries like “car shows near me” and brand/entity variations. The fixes cleared the way for the site’s content to rank effectively.

9) Implementation Playbooks (Copy, Paste, Ship)

These are actionable, ticket-ready playbooks for common technical SEO fixes.

9.1 Sitemap & Robots Hygiene

- Sitemap: The sitemap must only contain canonical, indexable URLs with a 200 status.

- Robots: Disallow crawling of infinite facets/params and internal search results in robots.txt.
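
The sitemap rule above can be enforced by generating the file from crawl data instead of trusting a plugin’s defaults. The sketch below assumes each crawl row is a dictionary with url, status, indexable, and canonical keys; those field names are hypothetical and would need mapping to your crawler’s export columns.

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(crawl_rows):
    """Build sitemap XML from rows that are 200, indexable, and self-canonical."""
    urls = sorted(
        row["url"]
        for row in crawl_rows
        if row["status"] == 200 and row["indexable"] and row["canonical"] == row["url"]
    )
    entries = "".join(f"  <url><loc>{escape(url)}</loc></url>\n" for url in urls)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<urlset xmlns="{SITEMAP_NS}">\n{entries}</urlset>\n'
    )


if __name__ == "__main__":
    rows = [
        {"url": "https://www.example.com/events/", "status": 200, "indexable": True,
         "canonical": "https://www.example.com/events/"},
        {"url": "https://www.example.com/tag/chrome/", "status": 200, "indexable": False,
         "canonical": "https://www.example.com/tag/chrome/"},
    ]
    print(build_sitemap(rows))
```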

9.2 Redirect Cleanup

- Export all 3xx redirects from your crawl.

- Collapse chains to a single 301 redirect.

- Maintain a redirect map for all permanent moves.

9.3 CWV Stabilizers

- Layout: Set explicit width and height on all images and video embeds. Use min-height on elements that load dynamically to prevent CLS.

- Rendering: Inline critical CSS in a <style> block to render the above-the-fold content immediately. Defer non-critical CSS with a <link rel="preload" as="style"> tag whose onload handler switches it to a stylesheet (onload="this.onload=null;this.rel='stylesheet'").

- Lazy-loading: Use loading="lazy" on all images below the fold. Do not lazy-load the LCP image.

9.4 JS SEO

- Server-Side: Ensure all primary content and internal links are present in the server-delivered HTML.

- Routes: Keep URL routes stable and clean, avoiding client-side shenanigans like hashbangs.

9.5 Structured Data QA

- Validation: All structured data should be validated with Google’s tools.

- Accuracy: Ensure the schema reflects what’s on the page.

- Updates: Re-test structured data after every new release.

10) Ongoing Monitoring

Technical SEO is not a one-and-done project. It’s a continuous process of monitoring and maintenance.

- Weekly: Run a scheduled crawl of your site and review a delta report for new issues.

- Monthly: Review Core Web Vitals field data in GSC and compare it to lab metrics.

- Quarterly: Conduct a log file analysis to adjust crawl shaping.

- Always: Use a pre/post-release SEO QA checklist with your dev team to catch issues before they go live.
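
The weekly delta report boils down to comparing two crawl snapshots. A minimal sketch, assuming each snapshot has been reduced to a dictionary of URL to HTTP status code (the function name and report keys are illustrative):

```python
def crawl_delta(previous, current):
    """Compare two {url: status_code} snapshots and summarize what changed."""
    shared = set(previous) & set(current)
    return {
        "new_urls": sorted(set(current) - set(previous)),
        "removed_urls": sorted(set(previous) - set(current)),
        "status_changes": {
            url: (previous[url], current[url])
            for url in sorted(shared)
            if previous[url] != current[url]
        },
    }


if __name__ == "__main__":
    last_week = {"/": 200, "/events/": 200, "/blog/old-post/": 200}
    this_week = {"/": 200, "/events/": 404, "/blog/new-post/": 200}
    for key, value in crawl_delta(last_week, this_week).items():
        print(key, value)
```

The same comparison extends to canonicals, indexability flags, or title tags by swapping the status code for the field being tracked.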

11) Common Mistakes & Misconceptions in Technical SEO

- “Set it and forget it” audits: Technical SEO issues reappear with new releases, plugins, or hosting changes.

- Over-indexation of taxonomy pages: WordPress categories/tags can easily bloat the index if not managed.

- Canonicals as a crutch: Relying solely on canonicals to fix duplication is a mistake. Fixing the source of the duplication is more reliable.

- Ignoring log files: Crawlers show what can be crawled; logs show what bots did crawl.

- Assuming Core Web Vitals are “optional”: They are ranking factors and critical UX signals.

12) Enterprise vs SMB Technical SEO

The scope of an audit must be tailored to the site’s size and complexity.

SMB Audits

- Focus: Crawl/indexation basics, including sitemaps, robots.txt, and redirects.

- Speed: Lightweight speed tuning using caching plugins and image compression.

- Schema: Template-level schema for Organization or LocalBusiness.

- Cadence: Quarterly audits are sufficient.

Enterprise Audits

- Focus: Crawl budget optimization, using custom log file parsing and dashboards.

- Edge SEO: Using CDNs for large-scale header, redirect, and robots management.

- Global: Complex hreflang implementations and programmatic checks.

- Monitoring: Monthly or continuous monitoring pipelines integrated into CI/CD.

13) Audit Reporting & Stakeholder Communication

Findings only turn into fixes when each audience receives them in a form it can act on.

- Executive Summary: A 1-2 page summary with the top 5 issues, their business impact, and recommended next steps.

- Developer Backlog: A detailed, ticketized list of issues with clear reproduction steps.

- Visuals: Use graphs for CWV or charts for indexation to make the impact tangible.

- Prioritization Matrix: Use the High Impact / Low Effort matrix to guide the development team.

14) Future of Technical SEO

The field is evolving rapidly with new technologies and search engine behaviors.

- AI & Search Generative Experience (SGE, since rebranded as AI Overviews): AI-driven SERP features draw on pages that are crawlable, indexable, and clearly structured, so clean structured data and efficient crawlability matter even more.

- Edge SEO: Manipulating headers, redirects, and canonical logic at the CDN layer is now mainstream.

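- Core Web Vitals 2.0: INP replaced First Input Delay (FID) as the Core Web Vitals responsiveness metric in March 2024, and it measures responsiveness across the whole visit rather than only the first interaction. Expect the metric set to keep evolving.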

- Serverless & Headless CMS: These architectures require technical SEOs to handle rendering and schema injection with a deeper understanding of the server/client relationship.

15) Key Takeaways

- Continuous work: Technical SEO is ongoing infrastructure work, not a one-off project.

- Scope: Audit scope varies by site size, from SMB basics to enterprise crawl shaping.

- Communication: Clean reports lead to developer action, which leads to measurable results.

- Future: The future is edge, AI, and performance. Prepare your stack now.

Technical SEO clears the runway so your content strategy and link acquisition can take off. If you want help running a deep technical audit and shipping the fixes, see our Technical SEO services, our Website Management program, or talk to us about WordPress architecture and performance. We’ll prioritize impact, ship fast, and validate results in Search Console.
